Building a Data-Driven Cyber Risk Management Framework for Your Homelab

I treat my homelab like a tiny networked country. It has assets, borders, and badly behaved services. A Cyber Risk Management Framework gives that country a rulebook built on data, not guesses. This guide shows how I collect the right signals, turn them into repeatable risk scores, and use those scores to harden VLAN configuration and firewall rules.

Start with a clear inventory. Name every device and record its IP, MAC, role, OS, criticality and whether it faces the internet. I use a simple CSV or a small SQLite database. That single source of truth keeps data-driven decisions honest.
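If you go the SQLite route, a table like the one below is roughly all it takes. This is a minimal sketch, not a prescribed schema: the column names, the 1 to 5 criticality scale and the `open_inventory`/`upsert_asset` helpers are my own illustrative choices. The upsert (`ON CONFLICT ... DO UPDATE`) needs SQLite 3.24 or newer, which current Python builds ship with.

```python
import sqlite3

# Illustrative schema: column names are hypothetical, mirroring the fields listed above.
SCHEMA = """
CREATE TABLE IF NOT EXISTS assets (
    name            TEXT PRIMARY KEY,
    ip              TEXT NOT NULL,
    mac             TEXT NOT NULL,
    role            TEXT NOT NULL,
    os              TEXT,
    criticality     INTEGER NOT NULL CHECK (criticality BETWEEN 1 AND 5),
    internet_facing INTEGER NOT NULL CHECK (internet_facing IN (0, 1))
);
"""


def open_inventory(path=":memory:"):
    """Open (or create) the inventory database and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


def upsert_asset(conn, name, ip, mac, role, os_name, criticality, internet_facing):
    """Insert a device, or update every field if a device with that name already exists."""
    conn.execute(
        """
        INSERT INTO assets (name, ip, mac, role, os, criticality, internet_facing)
        VALUES (?, ?, ?, ?, ?, ?, ?)
        ON CONFLICT(name) DO UPDATE SET
            ip = excluded.ip,
            mac = excluded.mac,
            role = excluded.role,
            os = excluded.os,
            criticality = excluded.criticality,
            internet_facing = excluded.internet_facing
        """,
        # MACs are lower-cased so the same device never appears in two spellings.
        (name, ip, mac.lower(), role, os_name, criticality, int(internet_facing)),
    )
    conn.commit()
```

Keying on the device name means re-running a discovery script updates rows instead of duplicating them, and a query such as `SELECT name FROM assets WHERE internet_facing = 1 ORDER BY criticality DESC` gives an instant "what is exposed and how much does it matter" list.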

What data to collect

- Network telemetry: DHCP logs, ARP tables, switch port mappings.

- Flow and packet alerts: Suricata, Zeek or a managed IDS feed.

- Vulnerability data: periodic Nmap + OpenVAS scans or Nessus if you prefer.

- Service exposure: open ports, TLS certificate expiry, SSH banners.

- Configuration drift: snapshots of firewall and router configs.

- Authentication anomalies: failed login spikes from services.
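Of those signals, TLS certificate expiry is the easiest to collect with nothing but the standard library. The sketch below is one way to do it, not the only one; `fetch_not_after` and `days_until_expiry` are hypothetical helper names, and the `cafile` parameter assumes you run your own internal CA for homelab certificates.

```python
import socket
import ssl
import time


def fetch_not_after(host, port=443, cafile=None, timeout=5.0):
    """Complete a TLS handshake and return the server certificate's notAfter string.

    The default context verifies the chain, so self-signed homelab certificates need
    their CA passed in via `cafile`; otherwise the handshake raises an SSL error.
    """
    context = ssl.create_default_context(cafile=cafile)
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]


def days_until_expiry(not_after, now=None):
    """Convert an OpenSSL-style notAfter string into days remaining (negative once expired)."""
    expires_at = ssl.cert_time_to_seconds(not_after)
    if now is None:
        now = time.time()
    return (expires_at - now) / 86400
```

Because the context verifies certificates, an already-expired certificate fails the handshake instead of returning a negative number, so a verification error from a daily check is itself a finding worth logging.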

Practical collection tips

- Centralise logs. I ship firewall, IDS and host logs to a single server (ELK, Grafana Loki, or plain flat files at small scale). Central logs make correlation across sources possible.

- Normalise fields early. Convert timestamps to UTC, standardise hostname labels and tag VLAN IDs. That makes automated rules simpler.

- Automate scans. Schedule daily light checks (port, cert expiry) and weekly deeper vulnerability scans. Keep scan schedules off-peak so the lab does not become unusable during tests.

- Add metadata. For each asset include a simple trust level (admin device, server, IoT, guest) and an owner field even if that owner is just you.
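Normalisation is easiest as one small function that every log record passes through on ingest. Here is a minimal sketch, assuming a hypothetical addressing plan where VLAN N uses `192.168.N.0/24` and records that arrive as dicts with `timestamp`, `hostname` and `src_ip` keys; adapt both to whatever your shippers actually emit.

```python
import ipaddress
from datetime import datetime, timezone

# Hypothetical addressing plan: VLAN N uses 192.168.N.0/24. Replace with your own subnets.
VLAN_SUBNETS = {
    vid: ipaddress.ip_network(f"192.168.{vid}.0/24") for vid in (10, 20, 30, 40, 50)
}


def vlan_for(ip):
    """Return the VLAN ID whose subnet contains `ip`, or None if it is unknown."""
    addr = ipaddress.ip_address(ip)
    for vid, net in VLAN_SUBNETS.items():
        if addr in net:
            return vid
    return None


def normalise_event(raw):
    """Map one raw log record onto a fixed shape: UTC time, short hostname, VLAN tag."""
    ts = datetime.fromisoformat(raw["timestamp"])
    if ts.tzinfo is None:
        # A naive timestamp cannot be converted safely; fix the source instead of guessing.
        raise ValueError(f"timestamp without timezone: {raw['timestamp']}")
    return {
        "timestamp": ts.astimezone(timezone.utc).isoformat(),
        "hostname": raw["hostname"].strip().lower().split(".")[0],
        "src_ip": raw["src_ip"],
        "vlan": vlan_for(raw["src_ip"]),
        "source": raw.get("source", "unknown"),
        "message": raw.get("message", ""),
    }
```

Rejecting timezone-less timestamps is a deliberate choice: silently assuming local time is how correlation quietly breaks twice a year. Note that `datetime.fromisoformat` only accepts a trailing `Z` from Python 3.11 onwards.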

Example metrics to track

- Count of critical CVEs per host.

- Number of externally reachable ports per subnet.

- Mean time between failed logins.

- Number of IDS alerts per day, by rule.

Those metrics let you make data-driven decisions instead of guessing what is risky.
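Each of those metrics is a simple aggregation once the data is normalised. The functions below are a sketch, assuming hypothetical input shapes (scanner findings as dicts with `host` and `cvss`, external scan results as `(ip, port)` pairs, failed logins and IDS alerts as ISO-8601 UTC timestamps) and /24 subnets by default. "Critical" here means CVSS 9.0 or higher, which is the CVSS v3 critical band.

```python
import ipaddress
from collections import Counter
from datetime import datetime


def critical_cves_per_host(findings):
    """Count findings with CVSS >= 9.0 (the CVSS v3 'critical' band) per host."""
    return Counter(f["host"] for f in findings if f["cvss"] >= 9.0)


def exposed_ports_per_subnet(open_ports, prefix=24):
    """Count distinct externally reachable (ip, port) pairs per subnet."""
    counts = Counter()
    for ip, _port in set(open_ports):  # set() drops duplicate scan results
        subnet = ipaddress.ip_network(f"{ip}/{prefix}", strict=False)
        counts[str(subnet)] += 1
    return counts


def mean_seconds_between(timestamps):
    """Mean gap between consecutive failed logins, or None with fewer than two."""
    ts = sorted(datetime.fromisoformat(t) for t in timestamps)
    if len(ts) < 2:
        return None
    gaps = [(later - earlier).total_seconds() for earlier, later in zip(ts, ts[1:])]
    return sum(gaps) / len(gaps)


def ids_alerts_per_day_by_rule(alerts):
    """Count IDS alerts keyed by (calendar day in UTC, rule name)."""
    return Counter(
        (datetime.fromisoformat(a["timestamp"]).date().isoformat(), a["rule"])
        for a in alerts
    )
```

A shrinking mean time between failed logins is the signal to watch: it means attempts are arriving faster.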

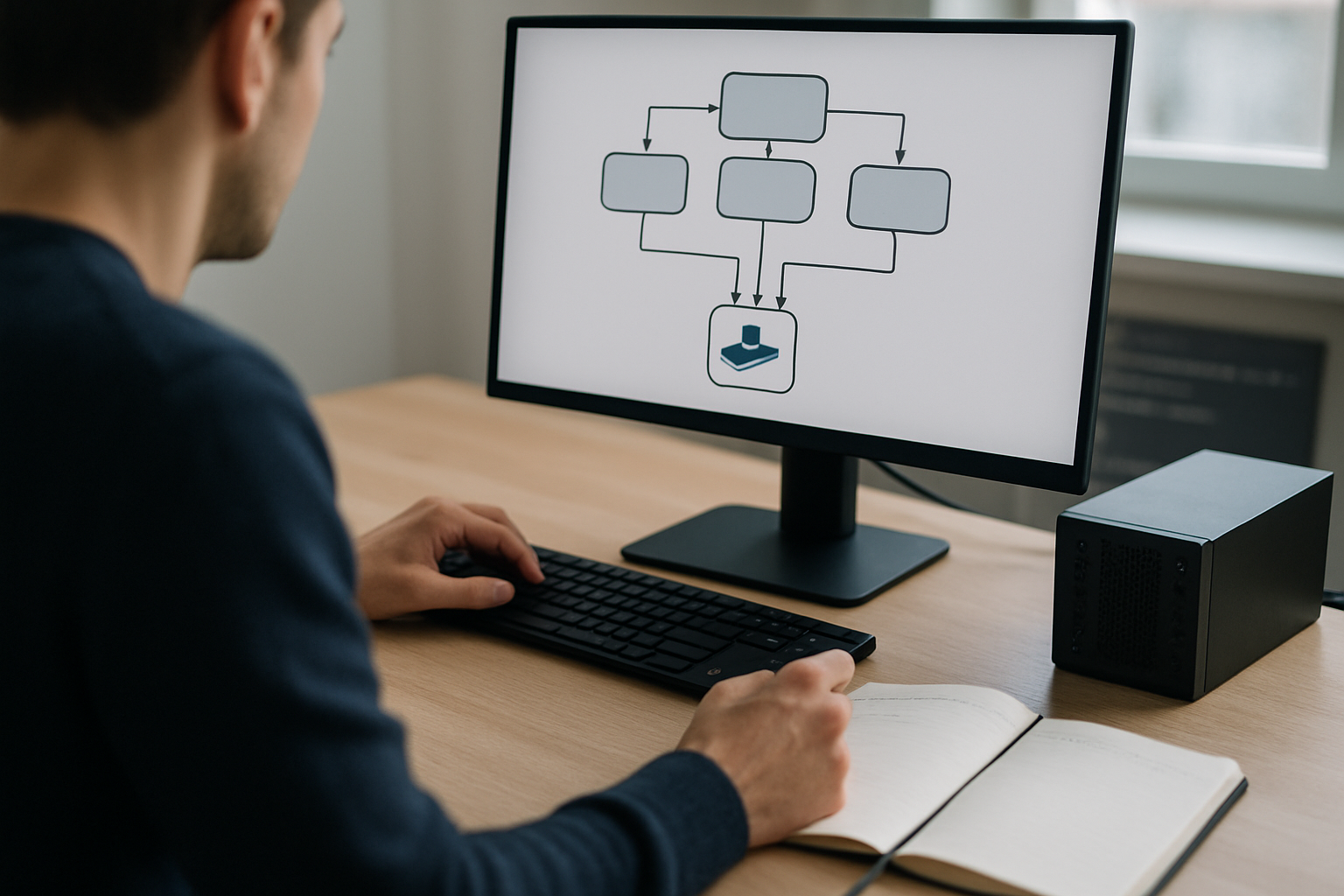

Turn metrics into risk and put controls where they matter

Keep the scoring simple. I use a 1–5 scale for likelihood and impact. Risk score = likelihood × impact. That gives a 1–25 number that is easy to threshold.
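The score itself is one multiplication, but validating the inputs keeps typos out of the record. A minimal sketch (`risk_score` and `ACHIEVABLE` are hypothetical names):

```python
def risk_score(likelihood, impact):
    """Risk = likelihood x impact, with both inputs on a 1-5 integer scale."""
    for name, value in (("likelihood", likelihood), ("impact", impact)):
        if not isinstance(value, int) or not 1 <= value <= 5:
            raise ValueError(f"{name} must be an integer from 1 to 5, got {value!r}")
    return likelihood * impact


# Every score the 5x5 grid can actually produce.
ACHIEVABLE = sorted(
    {risk_score(likelihood, impact) for likelihood in range(1, 6) for impact in range(1, 6)}
)
```

One property worth knowing when you set thresholds: the grid only produces 14 distinct values (1, 2, 3, 4, 5, 6, 8, 9, 10, 12, 15, 16, 20, 25). Nothing lands on 7, 11, 13 or between 16 and 20, so a band like "16 to 25" really means "16, 20 or 25".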

Scoring examples

- Likelihood 5: Internet-facing service with known exploit and no patch.

- Impact 5: Backup server or domain controller equivalent.

- Likelihood 2, Impact 1 (risk 2): Guest IoT device with no sensitive data.

Policies based on scores

- 16–25: Immediate action. Isolate the host, block inbound access, patch or rebuild.

- 8–15: Remediate within 72 hours. Restrict access and schedule a patch window.

- 1–7: Monitor. Log and trend for change.
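Encoding the bands as a function means scripts and dashboards all apply the same cut-offs. This is a sketch: the `Policy` tuple, the band names and the choice of `0` hours for "immediate" are my own encoding of the list above.

```python
from typing import NamedTuple, Optional


class Policy(NamedTuple):
    band: str
    action: str
    deadline_hours: Optional[int]  # 0 = act now, None = no deadline, just monitor


def policy_for(score):
    """Map a 1-25 risk score onto the three policy bands."""
    if not 1 <= score <= 25:
        raise ValueError(f"risk score out of range: {score}")
    if score >= 16:
        return Policy("immediate", "isolate, block inbound, patch or rebuild", 0)
    if score >= 8:
        return Policy("remediate", "restrict access, schedule a patch window", 72)
    return Policy("monitor", "log and trend for change", None)
```

For example, likelihood 4 and impact 2 give a score of 8, which `policy_for` places in the 72-hour remediation band.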

How that drives VLAN configuration and firewall rules

- VLAN layout I use:

  - VLAN 10: Management (switch, hypervisor, monitoring)

  - VLAN 20: Servers (non-public services)

  - VLAN 30: IoT (low trust)

  - VLAN 40: Guest (internet-only)

  - VLAN 50: Public DMZ (internet-facing web, reverse proxies)

- Microsegmentation rule of thumb: deny east-west by default. Only allow traffic that serves an explicit function.
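With the inventory's trust levels in place, choosing a VLAN for each asset can be mechanical. A minimal sketch, assuming a hypothetical mapping in which admin devices live on the management VLAN and only internet-facing servers go to the DMZ:

```python
# VLAN IDs and names from the layout above.
VLANS = {10: "Management", 20: "Servers", 30: "IoT", 40: "Guest", 50: "Public DMZ"}

# Hypothetical mapping from inventory trust levels to home VLANs.
TRUST_TO_VLAN = {"admin device": 10, "server": 20, "iot": 30, "guest": 40}


def assign_vlan(trust, internet_facing):
    """Pick a VLAN for an asset from its trust level and exposure."""
    trust = trust.strip().lower()
    if trust not in TRUST_TO_VLAN:
        raise ValueError(f"unknown trust level: {trust!r}")
    if internet_facing and trust == "server":
        # Internet-facing services live in the DMZ, not alongside internal servers.
        return 50
    return TRUST_TO_VLAN[trust]
```

Running this over the whole inventory and diffing the result against the switch's actual port assignments is a cheap way to catch devices sitting on the wrong VLAN.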

Sample firewall rules (conceptual)

- Default deny all between VLANs.

- Allow established/related traffic on the router, so replies to permitted connections get back.

- Management VLAN → Server VLAN: allow TCP 22 (SSH), 443 (HTTPS) and 5985 (WinRM, where needed) from specific management hosts only.

- Server VLAN → Internet: allow HTTPS and any other outbound ports a service genuinely needs; block SMB (TCP 445) to the internet.

- IoT VLAN → Internet: allow outbound HTTP/HTTPS only; block access to Server and Management VLANs.

- Guest VLAN → Internet: allow outbound web ports and DNS; deny access to local subnets.
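Before pushing rules like these to a real firewall, it helps to model them as data and test them. The evaluator below is a sketch of first-match, default-deny semantics, not the behaviour of any particular product. Assumptions: zone names are my own, `192.168.10.5` stands in for a hypothetical admin workstation, ports are treated as protocol-agnostic (DNS is really UDP and TCP 53) and established/related state is not modelled.

```python
from typing import FrozenSet, NamedTuple, Optional


class Rule(NamedTuple):
    src: str                                     # source zone
    dst: str                                     # destination zone ("internet" = WAN)
    ports: FrozenSet[int]                        # destination ports this rule matches
    action: str                                  # "allow" or "deny"
    src_hosts: Optional[FrozenSet[str]] = None   # None = any host in the source zone


# Hypothetical admin workstation address on the management VLAN.
MGMT_HOSTS = frozenset({"192.168.10.5"})

# The sample rules above, in first-match order.
RULES = [
    Rule("mgmt", "servers", frozenset({22, 443, 5985}), "allow", MGMT_HOSTS),
    Rule("servers", "internet", frozenset({445}), "deny"),
    Rule("servers", "internet", frozenset({443}), "allow"),
    Rule("iot", "internet", frozenset({80, 443}), "allow"),
    Rule("guest", "internet", frozenset({53, 80, 443}), "allow"),
]


def evaluate(src_zone, src_ip, dst_zone, port, rules=RULES):
    """Return the action of the first matching rule, or "deny" if none match."""
    for rule in rules:
        if (rule.src, rule.dst) != (src_zone, dst_zone):
            continue
        if port not in rule.ports:
            continue
        if rule.src_hosts is not None and src_ip not in rule.src_hosts:
            continue
        return rule.action
    return "deny"  # default deny between zones
```

The explicit SMB deny is redundant under default deny, but it documents intent and still holds if someone later adds a broad outbound allow further down the list.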

Automating enforcement

- Convert risk scores to rule changes. Example: a host with critical vulns and high exposure moves into a quarantine VLAN automatically via a script that updates switch port assignments or applies a firewall tag.

- Use an orchestration tool or simple SSH scripts that push ACL changes to pfSense, OPNsense or a managed switch.

- Maintain configuration as code for firewall rules. Store rules in Git and tag rule changes with the risk trigger that caused them.
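The decision half of that automation can be kept separate from the device-specific push. This sketch only plans quarantine moves and produces a Git-friendly record tagged with the triggering risk; the quarantine VLAN ID `99` and the input field names are hypothetical, and actually applying the change to pfSense, OPNsense or a switch is left out because it depends on your device's API or CLI.

```python
import json

QUARANTINE_VLAN = 99  # hypothetical ID; the layout above has no quarantine VLAN yet
ISOLATE_AT = 16       # bottom of the "immediate action" band


def plan_changes(hosts):
    """Return quarantine moves for hosts whose risk score reaches ISOLATE_AT."""
    changes = []
    for h in hosts:
        score = h["likelihood"] * h["impact"]
        if score >= ISOLATE_AT and h["vlan"] != QUARANTINE_VLAN:
            changes.append({
                "host": h["name"],
                "from_vlan": h["vlan"],
                "to_vlan": QUARANTINE_VLAN,
                "risk_score": score,
                "trigger": h["trigger"],
            })
    return changes


def commit_message(change):
    """One-line Git commit message recording which risk trigger caused the change."""
    return (f"quarantine {change['host']} (VLAN {change['from_vlan']} -> "
            f"{change['to_vlan']}): risk {change['risk_score']}, trigger {change['trigger']}")


def to_json(changes):
    """Stable, sorted JSON so the change file diffs cleanly in Git."""
    return json.dumps(changes, indent=2, sort_keys=True)
```

Keeping the plan as a reviewable file also leaves room for a human check before anything moves, which matters for the scoring caveat further down.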

I had a Raspberry Pi serving a self-hosted app on a forwarded port. Daily port scans flagged it as exposed, and the vulnerability scan returned a medium-severity CVE. I scored it likelihood 4 and impact 2, giving a risk of 8, so policy required remediation within 72 hours. I moved the Pi to the IoT VLAN, removed the port forward, put the app behind a reverse proxy in the DMZ and scheduled an OS patch. The score dropped and the alert count fell back to baseline.

Make dashboards that show trends, not single blips. A steady increase in IDS alerts over a week is more meaningful than a single noisy day.

Use simple visualisations: time series for alerts, bar charts for critical CVEs by host, and a heat map of risk scores by VLAN.
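One way to separate a trend from a blip is to compare the median of the last week with the median of the week before. A median ignores a single noisy day, while a sustained rise moves it. This is a sketch; the 7-day window and the 25% threshold (`min_ratio=1.25`) are hypothetical defaults to tune against your own baseline.

```python
from statistics import median


def is_rising(daily_counts, window=7, min_ratio=1.25):
    """True if the median of the last `window` days exceeds the previous window's median
    by at least `min_ratio`. Medians make a single noisy day irrelevant."""
    if len(daily_counts) < 2 * window:
        raise ValueError(f"need at least {2 * window} days of counts")
    previous = median(daily_counts[-2 * window:-window])
    recent = median(daily_counts[-window:])
    if previous == 0:
        return recent > 0
    return recent / previous >= min_ratio
```

With a flat baseline of 10 alerts a day, one spike to 80 leaves the recent median at 10 and is not flagged, while a climb from 12 to 24 over the week pushes the median to 18 and is.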

Routine work I run

- Weekly: quick port and cert checks, review new high-risk hosts.

- Monthly: full vulnerability scan and config snapshots.

- Quarterly: validate VLAN plan. Does the traffic map still match intent? Remove stale rules.
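Finding stale rules for the quarterly review is a filter over per-rule hit times. This sketch assumes you can export, from firewall logs or counters, the last time each rule matched (`None` if never); the rule IDs and the 90-day idle cut-off are hypothetical.

```python
from datetime import datetime, timedelta


def stale_rules(rules, now, max_idle_days=90):
    """Return IDs of rules that never matched, or last matched before the cut-off."""
    cutoff = now - timedelta(days=max_idle_days)
    return [r["id"] for r in rules if r["last_hit"] is None or r["last_hit"] < cutoff]
```

Treat the output as candidates, not deletions: a rule that only fires during a rare restore job can look idle for months.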

Avoid common traps

- Do not let the score become sacred. Use it to prioritise, not to decide everything automatically.

- Keep one dependable source of inventory. Multiple lists mean confusion.

- Do not hardcode credentials into automation scripts. Use vaulting or environment variables.
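For the environment-variable route, fail loudly when a secret is missing rather than falling back to a default. A minimal sketch; `MissingSecret`, `get_secret` and a variable name like `FIREWALL_API_TOKEN` are hypothetical.

```python
import os


class MissingSecret(RuntimeError):
    """Raised when a required credential is not present in the environment."""


def get_secret(name):
    """Read a credential from the environment and fail loudly if it is absent or empty."""
    value = os.environ.get(name)
    if not value:
        raise MissingSecret(f"set the {name} environment variable before running")
    return value
```

A script then calls `get_secret("FIREWALL_API_TOKEN")` at startup, so a missing token stops the run before any change is pushed.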

Treat the framework as a pipeline: collect, normalise, score, act, verify. Keep scoring simple and transparent so actions are defensible. Use VLAN configuration and firewall rules to enforce risk-based separation. Automate the boring bits and keep your dashboards showing trends. That turns gut feeling into repeatable, data-driven decisions about homelab security.